Time series analysis is the process of examining data collected at regular intervals over a period of time, with the aim of identifying trends, seasonality, and residuals that can help with forecasting. It entails inferring what has happened to a set of data points in the past and using that understanding to anticipate future values.

Time series data analysis enables the extraction of useful statistics and other data properties. Time series data are, as the name suggests, a set of observations that have been produced by repeating measurements across time. When you have that data, you may plot it on a graph to find out additional specifics about the data you’re tracking.

The rise and fall in temperature over the course of a day is a fairly simple time series analysis example. You may get a thorough picture of the rise and fall of the temperature in your area by keeping track of the precise temperature outside at hourly intervals for 24 hours. Then, if you are aware that the weather conditions, such as precipitation and humidity, would be generally similar the following day, you may make a more educated guess about what the temperature might be at particular times. Yes, this is an oversimplified example, but no matter what you’re talking about, the underlying structure is the same.

Such analysis requires that the pattern of observed time series data is identified. Once the pattern is established, it can be interpreted, integrated with other data, and used for forecasting (which is fundamental for machine learning). Machine learning is a type of artificial intelligence that allows computer programs to actually “learn” and become “smarter” over time, all without being explicitly programmed to do so.

Importance of time series analysis

As more connected devices are implemented and data is expected to be collected and processed in real-time, the ability to handle time series data has become increasingly significant. This is going to become especially important over the next few years, as the Internet of Things begins to play a more common role in all of our lives.

At its core, the Internet of Things is a term used to describe a network of literally billions of devices that are all connected together, both creating and sharing data at all times. In a personal context, we’ve already seen this begin to play out in “smart” homes across America. Your thermostat knows that when it gets to a certain temperature, it needs to lower the shades in a room to help control the temperature. Or your smart home hub knows that as soon as the last person leaves the house, it is to lock all the doors and turn all the lights off. It wouldn’t be able to get to this point were it not for an interconnected network of sensors that are sharing information with one another at all times – making time series analysis all the more important.

Among other things, time series analysis can be used to effectively:

- Illustrate that data points taken over time may have some sort of internal structure. There may be a trend or pattern to your data that likely otherwise would have gone undiscovered.

- Provide users with a better understanding of the past, thus putting them in a position to better predict the future.

That last point is particularly important, and it’s a big part of the reason why time series analysis is used in economics, statistics and in similar fields. If you know historical data for a particular stock, for example, and you know how it has traditionally performed given certain world events, you can better predict the price when similar events occur in the future. If you know that the event itself is about to happen (like a larger economic downturn), you can use that insight to make a better and more informed decision about whether to purchase the stock itself.

Since the analysis is based on data plotted against time, the first step is to plot the data and observe any patterns that might occur over time.

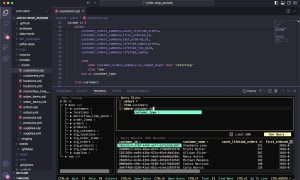

Programming languages used for analyzing time series

Among the many programming languages used for time series analysis and data science are:

Applications in various domains

Time series models are used to:

- Gain an understanding of the underlying forces and structure that produced the observed data.

- Fit a model and proceed to forecasting, monitoring or feedback and feedforward control.

Applications span sectors such as:

- Budgetary analysis

- Census analysis

- Economic forecasting

- Inventory studies

- Process and quality control

- Sales forecasting

- Stock market analysis

- Utility studies

- Workload projections

- Yield projections

Understanding data stationarity

Stationarity is an important concept in time series analysis. Many useful analytical tools, statistical tests, and models rely on stationarity to perform forecasting. It is therefore often necessary to determine whether the data was generated by a stationary process, resulting in stationary time series data. Conversely, it is sometimes useful to transform a non-stationary process into a stationary one in order to apply specific forecasting functions to it. A common method of stationarizing a time series is differencing, which replaces each value with its change from the previous value and can be used to remove a trend that is not of interest.
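
As a rough illustration of differencing, the sketch below subtracts each value from the one that follows it. The function name `difference` and the example series are made up for this illustration; real projects usually rely on a library routine instead.

```python
def difference(series, lag=1):
    """Return the lag-differenced series: y[t] = x[t] - x[t - lag]."""
    return [series[t] - series[t - lag] for t in range(lag, len(series))]


# Hypothetical series with a linear trend: x[t] = 3 + 2t.
trended = [3 + 2 * t for t in range(8)]

# The first difference of a straight line is its constant slope,
# so the upward trend disappears.
detrended = difference(trended)
print(detrended)
```

Note that the differenced series is one observation shorter than the original for each unit of lag.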

Stationarity in a time series is defined by a constant mean, variance, and autocorrelation. While there are several ways in which a series can be non-stationary (for instance, an increasing variance over time), a series can only be stationary in one way (when all these properties do not change over time).
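
One informal way to probe these properties is to compare summary statistics across different stretches of the series. The sketch below is only a rough heuristic, not a formal stationarity test; the helper `half_stats` and both simulated series are assumptions made for the example.

```python
import random
import statistics


def half_stats(series):
    """Return ((mean, variance) of first half, (mean, variance) of second half)."""
    mid = len(series) // 2
    first, second = series[:mid], series[mid:]
    return (
        (statistics.fmean(first), statistics.pvariance(first)),
        (statistics.fmean(second), statistics.pvariance(second)),
    )


rng = random.Random(0)  # fixed seed so the illustration is repeatable

# White noise: mean and variance stay the same throughout (stationary).
noise = [rng.gauss(0, 1) for _ in range(400)]

# The same noise with an upward drift added: the mean changes over time.
drifting = [0.05 * t + e for t, e in enumerate(noise)]

(noise_m1, _), (noise_m2, _) = half_stats(noise)
(drift_m1, _), (drift_m2, _) = half_stats(drifting)

print("white noise mean shift:", noise_m2 - noise_m1)
print("drifting series mean shift:", drift_m2 - drift_m1)
```

A large shift in the mean or variance between the two halves suggests the series is not stationary, while similar values are consistent with stationarity but do not prove it.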

Patterns that may be present within time series data

The variation or movement in a series can be understood through the following three components: trend, seasonality, and residuals. The first two components represent systematic types of time series variability. The third represents statistical noise (analogous to the error terms included in various types of statistical models). To visually explore a series, time series are often formally partitioned into each of these three components through a procedure referred to as time series decomposition, in which a time series is decomposed into its constituent components.

Trend

Trend refers to any systematic change in the level of a series — i.e., its long-term direction. Both the direction and slope (rate of change) of a trend may remain constant or change throughout the course of the series.

Seasonality

Unlike the trend component, the seasonal component of a series is a repeating pattern of increase and decrease in the series that occurs consistently throughout its duration. Seasonality is commonly thought of as a repeating pattern within a period of one year, such as quarterly or monthly cycles. However, seasons aren’t confined to that time scale; a seasonal pattern can repeat over any fixed period, even one measured in nanoseconds.

Residuals

Residuals constitute what’s left after you remove the seasonality and trend from the data.
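
The three components can be separated with a classical additive decomposition, sketched below under the assumption that the series equals trend plus seasonality plus residual. The function name `decompose_additive`, the moving-average trend estimate, and the example data are choices made for this illustration rather than the only way to decompose a series.

```python
def decompose_additive(series, period):
    """Split a series into trend, seasonal, and residual parts (additive model)."""
    n = len(series)
    half = period // 2

    # Trend: centered moving average; left as None near the edges,
    # where a full window is not available.
    trend = [None] * n
    for t in range(half, n - half):
        window = series[t - half : t + half + 1]
        if period % 2:
            trend[t] = sum(window) / period
        else:
            # Even period: give the two end points half weight each.
            trend[t] = (sum(window) - 0.5 * (window[0] + window[-1])) / period

    # Seasonal: average detrended value at each position in the cycle,
    # shifted so the seasonal effects sum to zero over one period.
    buckets = [[] for _ in range(period)]
    for t in range(n):
        if trend[t] is not None:
            buckets[t % period].append(series[t] - trend[t])
    means = [sum(b) / len(b) for b in buckets]
    offset = sum(means) / period
    seasonal = [means[t % period] - offset for t in range(n)]

    # Residual: what is left after removing trend and seasonality.
    residual = [
        None if trend[t] is None else series[t] - trend[t] - seasonal[t]
        for t in range(n)
    ]
    return trend, seasonal, residual


# Hypothetical data: a linear trend plus a pattern that repeats every 4 steps.
pattern = [5, -2, -4, 1]
series = [10 + 0.5 * t + pattern[t % 4] for t in range(24)]
trend, seasonal, residual = decompose_additive(series, period=4)
print(seasonal[:4])
```

Because the example series contains no noise, the residuals here are essentially zero; with real data they carry whatever the trend and seasonal estimates fail to explain.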

Methods of analyzing time series data

Time series analysis methods may be divided into two classes:

- Frequency-domain methods (these include spectral analysis and wavelet analysis)

In electronics, control systems engineering, and statistics, the frequency domain refers to the analysis of mathematical functions or signals with respect to frequency, rather than time.

- Time-domain methods (these include autocorrelation and cross-correlation analysis)

Time domain refers to the analysis of mathematical functions, physical signals or time series of economic or environmental data, with respect to time. (In the time domain, correlation and analysis can be made in a filter-like manner using scaled correlation, thereby mitigating the need to operate in the frequency domain.)

Additionally, time series analysis methods may be divided into two other types:

- Parametric: The parametric approaches assume that the underlying stationary stochastic process has a certain structure which can be described using a small number of parameters (for example, using an autoregressive or moving average model). In these approaches, the task is to estimate the parameters of the model that describes the stochastic process.

- Non-parametric: By contrast, non-parametric approaches explicitly estimate the covariance or the spectrum of the process without assuming that the process has any particular structure.
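
To make the distinction concrete, the sketch below treats the same simulated series both ways. The non-parametric part estimates autocovariances directly from the data; the parametric part assumes a first-order autoregressive structure and estimates its single coefficient. The function names, the simulated data, and the value `true_phi = 0.7` are assumptions made for this example.

```python
import random


def sample_autocovariance(series, lag):
    """Non-parametric: estimate the lag-k autocovariance directly from the data."""
    n = len(series)
    mean = sum(series) / n
    return sum(
        (series[t] - mean) * (series[t - lag] - mean) for t in range(lag, n)
    ) / n


def fit_ar1(series):
    """Parametric: assume x[t] = phi * x[t-1] + noise and estimate phi."""
    return sample_autocovariance(series, 1) / sample_autocovariance(series, 0)


# Simulate example data from a first-order autoregressive process.
rng = random.Random(42)
true_phi = 0.7  # assumed value, used only to generate the example series
x = [0.0]
for _ in range(4999):
    x.append(true_phi * x[-1] + rng.gauss(0, 1))

phi_hat = fit_ar1(x)

# Under the parametric model the lag-2 autocorrelation is phi squared;
# the non-parametric estimate makes no such assumption.
parametric_lag2 = phi_hat ** 2
nonparametric_lag2 = sample_autocovariance(x, 2) / sample_autocovariance(x, 0)
print(phi_hat, parametric_lag2, nonparametric_lag2)
```

When the assumed structure is right, one estimated parameter summarizes the whole dependence pattern; when it is wrong, the direct estimates remain valid while the parametric ones do not.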

Below is an overview of each of the above-mentioned methods.

Spectral analysis

Many time series show periodic behavior that can be very complex. Spectral analysis is a technique that allows us to discover underlying periodicities — it is one of the most widely used methods for data analysis in geophysics, oceanography, atmospheric science, astronomy, engineering, and other fields.

The spectral density can be estimated using an object known as a periodogram, which is the squared correlation between our time series and sine/cosine waves at the different frequencies spanned by the series. To perform spectral analysis, the data must first be transformed from time domain to frequency domain.
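
A minimal periodogram can be sketched with a direct discrete Fourier transform, as below. This version is written for readability and is slow on long series (production code would normally use a fast Fourier transform routine); the function name `periodogram`, the scaling by `n`, and the test signal are assumptions made for the illustration.

```python
import cmath
import math


def periodogram(series):
    """Return (frequency, power) pairs for frequencies 1/n up to 1/2 cycles per sample."""
    n = len(series)
    mean = sum(series) / n
    centered = [x - mean for x in series]
    result = []
    for k in range(1, n // 2 + 1):
        # Discrete Fourier transform coefficient at frequency k / n.
        coeff = sum(
            x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(centered)
        )
        result.append((k / n, abs(coeff) ** 2 / n))
    return result


# Hypothetical signal that repeats every 12 samples.
n = 96
signal = [math.sin(2 * math.pi * t / 12) for t in range(n)]

spectrum = periodogram(signal)
peak_freq, peak_power = max(spectrum, key=lambda pair: pair[1])
print("dominant period:", 1 / peak_freq)
```

The frequency with the largest power points to the dominant periodicity, which here corresponds to the 12-sample cycle built into the signal.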

Wavelet analysis

What is a wavelet? A wavelet is a function that is localized in time and frequency, generally with a zero mean. It is also a tool for decomposing a signal by location and frequency. Compare this with the Fourier transform, which decomposes a signal into its frequency components only and does not indicate where in time each frequency occurs.

Wavelets are analysis tools mainly for time series analysis and image analysis (not covered here). As a subject, wavelets are relatively new (1983 to present) and synthesize many new/old ideas.
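
The idea of localization can be illustrated with the Haar wavelet, one of the simplest wavelets. The text above does not name a specific wavelet, so the choice of Haar, the function names, and the example signal are all assumptions made for this sketch.

```python
import math


def haar_step(signal):
    """One level of the Haar wavelet transform (signal length must be even)."""
    if len(signal) % 2:
        raise ValueError("signal length must be even")
    scale = math.sqrt(2)
    pairs = range(0, len(signal), 2)
    # Approximation: scaled local averages (the coarse shape of the signal).
    approx = [(signal[i] + signal[i + 1]) / scale for i in pairs]
    # Detail: scaled local differences (where the signal changes quickly).
    detail = [(signal[i] - signal[i + 1]) / scale for i in pairs]
    return approx, detail


def haar_inverse(approx, detail):
    """Rebuild the original signal from one level of Haar coefficients."""
    scale = math.sqrt(2)
    signal = []
    for a, d in zip(approx, detail):
        signal.extend([(a + d) / scale, (a - d) / scale])
    return signal


# Hypothetical flat signal with a single spike at position 5.
signal = [4, 4, 4, 4, 4, 10, 4, 4]
approx, detail = haar_step(signal)
print(detail)
```

Only the detail coefficient covering the spike is non-zero, which shows how the wavelet coefficients record where a change happens as well as how large it is.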

Autocorrelation

What is autocorrelation in time series data? Autocorrelation is a type of serial dependence. Specifically, autocorrelation is when a time series is linearly related to a lagged version of itself. When you have a series of numbers where values can be predicted based on preceding values in the series, the series is said to exhibit autocorrelation. By contrast, correlation is simply when two different variables are linearly related.
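
A minimal sketch of the sample autocorrelation at a given lag is shown below. The function name `autocorrelation` and the repeating example series are assumptions made for the illustration.

```python
def autocorrelation(series, lag):
    """Sample autocorrelation between the series and itself shifted by `lag`."""
    n = len(series)
    mean = sum(series) / n
    variance = sum((x - mean) ** 2 for x in series)
    covariance = sum(
        (series[t] - mean) * (series[t - lag] - mean) for t in range(lag, n)
    )
    return covariance / variance


# Hypothetical series that repeats every 4 steps.
series = [0, 1, 0, -1] * 10

for lag in range(5):
    print(lag, round(autocorrelation(series, lag), 3))
```

The autocorrelation is strongly positive at lag 4, where the pattern lines up with itself, and strongly negative at lag 2, where peaks line up with troughs.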

Here’s why autocorrelation matters. Often, one of the first steps in any data analysis is performing regression analysis. However, one of the assumptions of regression analysis is that the data has no autocorrelation. This can be frustrating because if you try to do a regression analysis on data with autocorrelation, then your analysis will be misleading.

Additionally, some time series forecasting methods (specifically regression modeling) rely on the assumption that there isn’t any autocorrelation in the residuals (the difference between the fitted model and the data). People often use the residuals to assess whether their model is a good fit while ignoring the assumption that the residuals have no autocorrelation (or that the errors are independent and identically distributed, or i.i.d.). This mistake can mislead people into believing that their model is a good fit when in fact it isn’t.

Finally, perhaps the most compelling aspect of autocorrelation analysis is how it can help us uncover hidden patterns in our data and help us select the correct forecasting methods. Specifically, we can use it to help identify seasonality and trend in time series data. Additionally, analyzing the autocorrelation function (ACF) and partial autocorrelation function (PACF) in conjunction is necessary for selecting the appropriate ARIMA model for your time series prediction.

Cross-correlation

Cross-correlation is a measurement that tracks the movements of two variables or sets of data relative to each other. In its simplest version, it can be described in terms of an independent variable, X, and two dependent variables, Y and Z. If independent variable X influences variable Y and the two are positively correlated, then as the value of X rises so will the value of Y.

If the same is true of the relationship between X and Z, then as the value of X rises, so will the value of Z. Variables Y and Z can be said to be cross-correlated because their behavior is positively correlated as a result of each of their individual relationships to variable X. In time series work, the comparison is typically made at a range of time lags, so that one series can be matched against earlier or later values of the other.
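
With time series, cross-correlation is usually computed at several time shifts to see whether one series follows the other with a delay. The sketch below assumes that lagged form; the function names, the simulated data, and the three-step delay are made up for the example.

```python
import math
import random


def pearson(a, b):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((p - mean_a) * (q - mean_b) for p, q in zip(a, b))
    var_a = sum((p - mean_a) ** 2 for p in a)
    var_b = sum((q - mean_b) ** 2 for q in b)
    return cov / math.sqrt(var_a * var_b)


def cross_correlation(x, y, lag):
    """Correlation between x[t] and y[t + lag] for a non-negative lag."""
    return pearson(x[: len(x) - lag], y[lag:])


# Hypothetical data: y repeats x three steps later.
rng = random.Random(1)
x = [rng.gauss(0, 1) for _ in range(200)]
y = [0.0] * 3 + x[:-3]

best_lag = max(range(8), key=lambda lag: cross_correlation(x, y, lag))
print("y follows x with a delay of", best_lag, "steps")
```

The lag with the highest cross-correlation gives an estimate of how far one series trails the other.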

Parametric vs. nonparametric tests

Parametric tests assume underlying statistical distributions in the data. Therefore, several conditions of validity must be met so that the result of a parametric test is reliable. Nonparametric tests are more robust than parametric tests. They are valid in a broader range of situations (fewer conditions of validity).

Nonparametric tests do not rely on any distribution. They can thus be applied even if parametric conditions of validity are not met. When those conditions are met, however, parametric tests will have more statistical power than nonparametric tests: a parametric test is more likely to reject the null hypothesis (H0) when it is false. Most of the time, the p-value associated with a parametric test will be lower than the p-value associated with a nonparametric equivalent that is run on the same data.

Time series models

Generally speaking, there are three core models that you will be working with when performing time series analysis: autoregressive models, integrated models and moving average models.

An autoregressive model represents a random process in which each value depends linearly on its own previous values plus a random term. It is commonly used to perform time series analysis in economics, the natural sciences, and other fields. Moving-average models are commonly used to model univariate time series; the output variable depends linearly on both the current and past values of an imperfectly predictable (random) term. In an integrated model, the series is differenced one or more times to make it stationary before it is modeled; this is the “I” in ARIMA, which combines all three.
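
The three building blocks can be sketched in a few lines each. The example below assumes the usual ARIMA reading, in which an integrated series is a running sum that differencing undoes; the function names, coefficients, and simulated noise are assumptions made for the illustration.

```python
import random


def simulate_ar1(phi, noise):
    """Autoregressive: x[t] = phi * x[t-1] + e[t], starting from zero."""
    out, prev = [], 0.0
    for e in noise:
        prev = phi * prev + e
        out.append(prev)
    return out


def simulate_ma1(theta, noise):
    """Moving average: x[t] = e[t] + theta * e[t-1]."""
    return [
        noise[t] + (theta * noise[t - 1] if t > 0 else 0.0)
        for t in range(len(noise))
    ]


def integrate(series):
    """Integrated: running (cumulative) sum of the series."""
    out, total = [], 0.0
    for value in series:
        total += value
        out.append(total)
    return out


def difference(series):
    """First difference, which reverses `integrate` apart from the first value."""
    return [series[t] - series[t - 1] for t in range(1, len(series))]


rng = random.Random(7)
noise = [rng.gauss(0, 1) for _ in range(100)]

ar_series = simulate_ar1(0.6, noise)
ma_series = simulate_ma1(0.4, noise)
integrated = integrate(ar_series)   # non-stationary: shocks accumulate
recovered = difference(integrated)  # differencing returns the AR series
print(ar_series[:3], ma_series[:3], integrated[:3])
```

Differencing the integrated series gives back the stationary autoregressive series (minus its first value), which is why differencing is applied before fitting the autoregressive and moving-average parts.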

How to do time series analysis will obviously vary depending on the model you choose to work with.

Time series analysis best practices

For the best results in time series analysis, it’s important to gain a better understanding of exactly what you’re trying to do in the first place. Remember that in a time series, the independent variable is often time itself and you’re typically using it to try to predict what the future might hold.

To get to that point, you have to understand whether or not the series is stationary, whether there is seasonality, and whether the variable is autocorrelated.

Autocorrelation is defined as the similarity of observations as a function of the amount of time that passes between them. Seasonality takes a look at specific, periodic fluctuations. If a time series is stationary, its own statistical properties do not change over time. To put it another way, the time series has a constant mean and variance, regardless of what is happening with the independent variable of time itself. These are all questions that you should be answering prior to the performance of time series analysis.